In this short article, you will learn two of the most important concepts in deep learning: underfitting and overfitting. This is an end-to-end guide, so you don’t need any previous knowledge to understand it. If you have questions, leave a comment below.

Keep reading! 💥

Underfitting and overfitting are the most important concepts in deep learning. I call them bugs 💩 in our model, and they happen all the time when building deep learning models. In my opinion, that is a good thing: every time I find a bug in my model, I learn something new. 🔰

What Is Underfitting

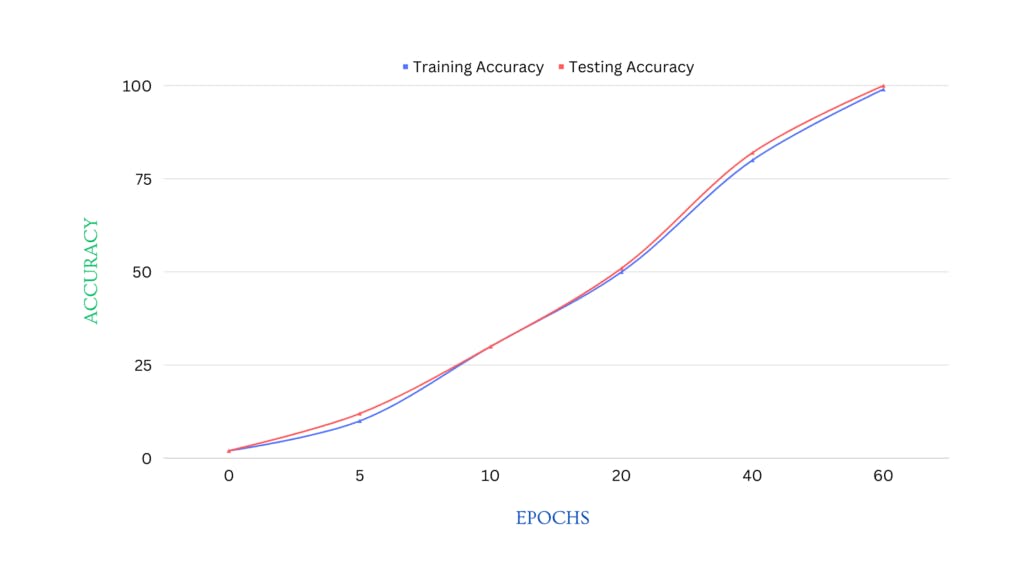

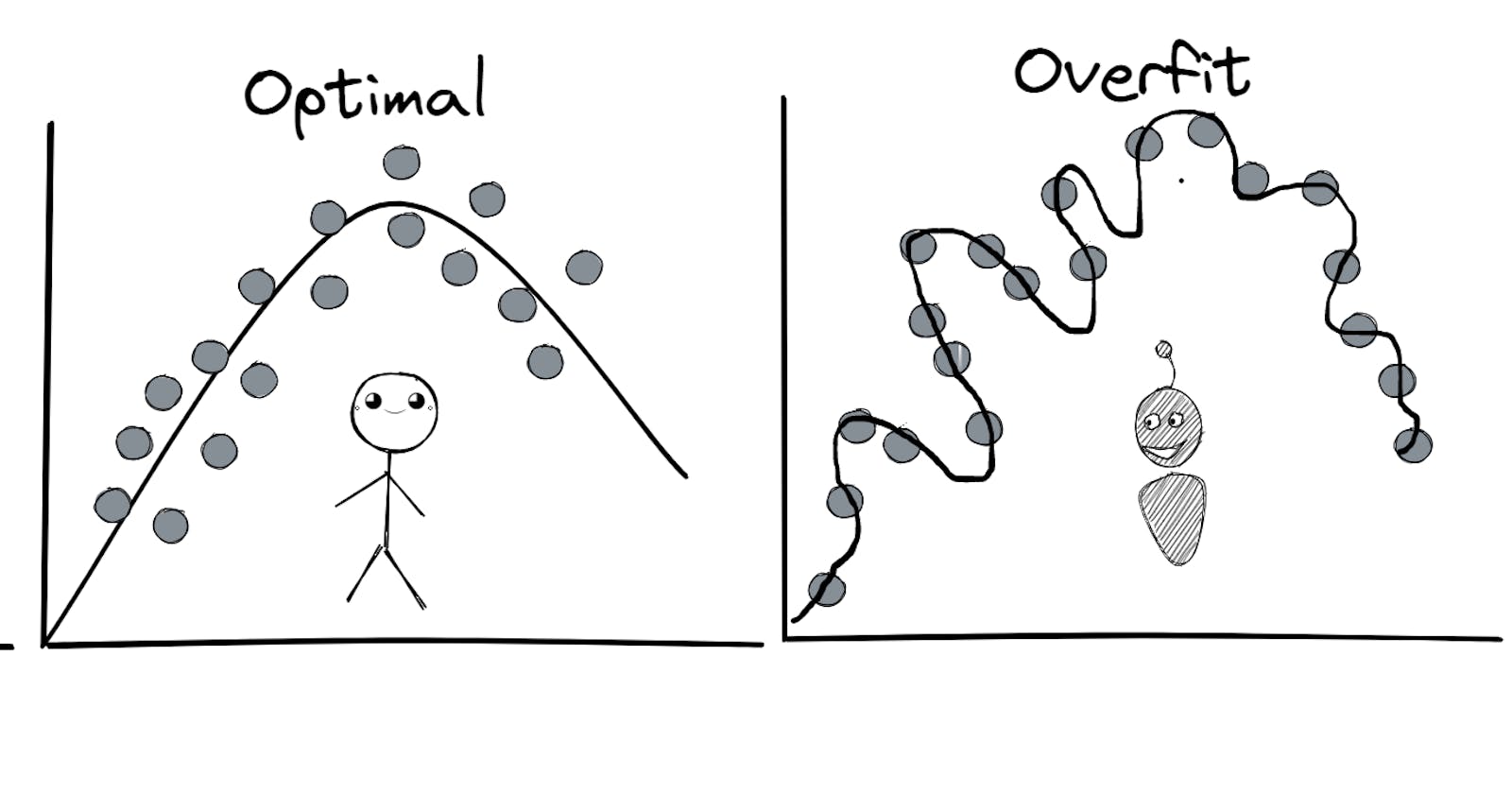

Underfitting means the model you build is so simple that it fails to learn the training data. One example is using a single perceptron to classify images as dog or cat. Look at the images above to understand it better.

Note ❌: In simple terms, underfitting means the model doesn’t learn the features in the training data.
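To make underfitting concrete, here is a minimal Python sketch under assumptions of my own: the toy data follows y = x², and the "too simple" model is a straight line fitted by least squares (a stand-in for the single perceptron above, not the article's own code). A straight line can't bend, so even its error on the training data stays high. Giving the same fitting routine a richer feature (x²) removes the error.

```python
# Toy data (an assumption for illustration): the true pattern is y = x**2.
xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x ** 2 for x in xs]


def fit_line(features, targets):
    """Ordinary least squares for y = a + b * feature."""
    n = len(features)
    mean_f = sum(features) / n
    mean_t = sum(targets) / n
    cov = sum((f - mean_f) * (t - mean_t) for f, t in zip(features, targets))
    var = sum((f - mean_f) ** 2 for f in features)
    b = cov / var
    a = mean_t - b * mean_f
    return a, b


def mse(predictions, targets):
    """Mean squared error between predictions and true values."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Too-simple model: a straight line in x cannot follow the curve.
a, b = fit_line(xs, ys)
train_mse = mse([a + b * x for x in xs], ys)

# Same fitting routine, but with a richer feature (x**2) that matches the pattern.
squares = [x ** 2 for x in xs]
a2, b2 = fit_line(squares, ys)
rich_mse = mse([a2 + b2 * s for s in squares], ys)

print(f"straight line: training MSE = {train_mse:.1f}")
print(f"with x**2 feature: training MSE = {rich_mse:.1f}")
# → straight line: training MSE = 12.0
# → with x**2 feature: training MSE = 0.0
```

The high error here is on the training data itself, which is the signature of underfitting: the model lacks the capacity to represent the pattern, no matter how long you train it.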

What Is Overfitting

If you understand underfitting, then overfitting is very easy to understand, because it’s the opposite. Let me explain!

Overfitting happens when you build a model that is too complex: instead of learning from the training data, it memorizes it. The model then performs very well on the training data but fails to predict new data it hasn’t seen before. 😭
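Here is a minimal Python sketch of memorizing versus learning. Everything in it is a hypothetical toy of my own (the even/odd task, and the `Memorizer` and `ParityLearner` classes), not code from the article. `Memorizer` stands in for a model with so much capacity that it can store every training example verbatim; `ParityLearner` can only keep one label per remainder `x % 2`, so it is forced to pick up the underlying concept.

```python
from collections import Counter

# Hypothetical toy task (not from the article): label an integer "even" or "odd".
train = [(2, "even"), (7, "odd"), (10, "even"), (13, "odd"), (4, "even"), (9, "odd")]
test = [(1, "odd"), (6, "even"), (11, "odd"), (8, "even"), (15, "odd"), (3, "odd")]


class Memorizer:
    """Stores every training pair verbatim: it memorizes instead of learning."""

    def fit(self, data):
        self.table = dict(data)
        return self

    def predict(self, x):
        # Unseen inputs get a fixed fallback guess, because no rule was learned.
        return self.table.get(x, "even")


class ParityLearner:
    """Learns one label per remainder x % 2, so it must capture the concept."""

    def fit(self, data):
        votes = {}
        for x, label in data:
            votes.setdefault(x % 2, Counter())[label] += 1
        # Keep the most common label seen for each remainder.
        self.rule = {r: c.most_common(1)[0][0] for r, c in votes.items()}
        return self

    def predict(self, x):
        return self.rule.get(x % 2, "even")


def accuracy(model, data):
    """Fraction of (x, label) pairs the model predicts correctly."""
    return sum(model.predict(x) == label for x, label in data) / len(data)


for model in (Memorizer().fit(train), ParityLearner().fit(train)):
    name = type(model).__name__
    print(f"{name}: train={accuracy(model, train):.2f}, test={accuracy(model, test):.2f}")
# → Memorizer: train=1.00, test=0.33
# → ParityLearner: train=1.00, test=1.00
```

Both models are perfect on the training data, so training accuracy alone can't tell them apart. Only the unseen test data reveals that the memorizer never learned the concept.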

I hope you now understand what these two terms mean in deep learning. If you don’t, that’s not a problem: I will share a story with you so you understand this concept quickly and remember it for a lifetime. 📜

One Boy, Two Things Happen: Underfitting & Overfitting

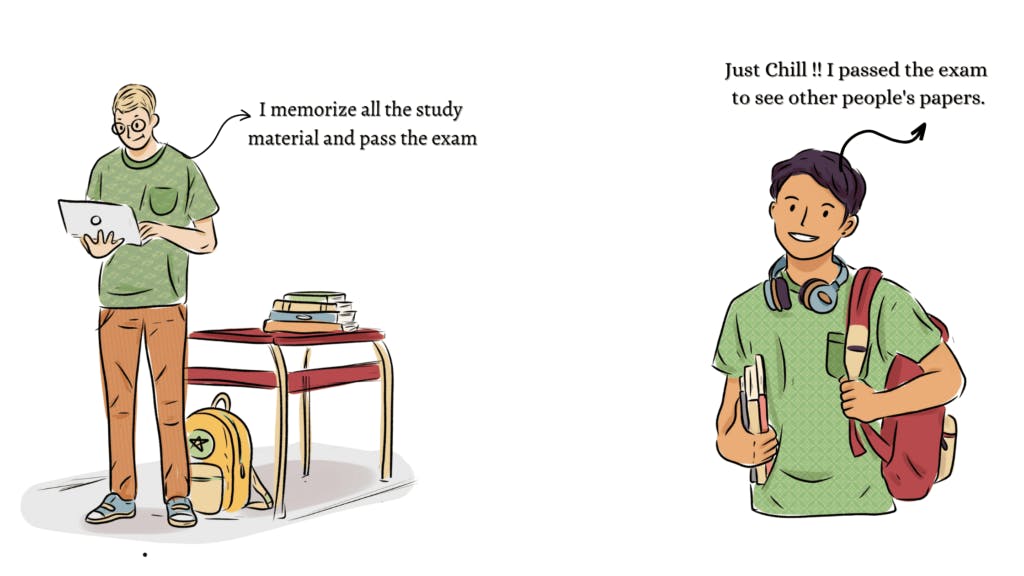

This story starts with a student 👨🏻🎓👩🏻🎓 studying for an exam 💯.

Underfitting happens when the student doesn’t study enough and fails the exam. 😭

Overfitting happens when the same student memorizes the study material 🧾 without understanding the concepts. This time he passes the exam, but when the teacher asks a question that isn’t in the exam paper, he has no answer, because he memorized the answers in the study material instead of understanding the concepts.

- Example 💥: The same thing happens today. Many people who come to deep learning focus on learning frameworks (TensorFlow, PyTorch, JAX) rather than the concepts of deep learning, so when a new framework comes out, they find it hard to learn.

I hope you understand these two concepts by now. If not, don’t worry; here they are one more time.

Note🔥:

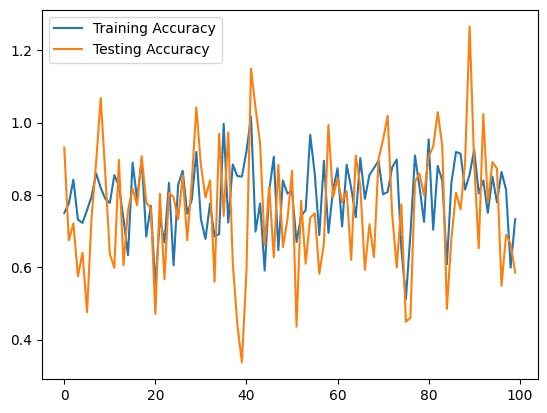

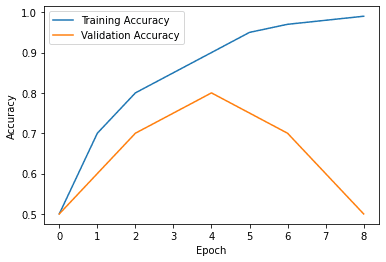

If the model does very well on the training set but poorly on the validation set, it is overfitting. Here is an example to remember: the training error is 1% and the validation error is 10%…

Conversely, if the model performs badly on the training set itself, it is underfitting. For example, the training error might be 24% and the validation error 25%.
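The two examples above can be turned into a small rule of thumb. Below is a minimal Python sketch; the function name `diagnose` and both thresholds (`acceptable_error` and `max_gap`, 5% each) are my own assumptions, not a standard API or a universal rule. It flags underfitting when the training error itself is too high, and overfitting when the validation error is much higher than the training error.

```python
def diagnose(train_error, val_error, acceptable_error=0.05, max_gap=0.05):
    """Rule-of-thumb diagnosis from training and validation error rates.

    Both thresholds are illustrative assumptions; pick them per task
    (for example, relative to the error a human would make).
    """
    underfit = train_error > acceptable_error    # can't even fit the training set
    overfit = val_error - train_error > max_gap  # big gap between train and validation
    if underfit and overfit:
        return "underfitting and overfitting"
    if underfit:
        return "underfitting"
    if overfit:
        return "overfitting"
    return "good fit"


print(diagnose(0.01, 0.10))  # → overfitting
print(diagnose(0.24, 0.25))  # → underfitting
```

The two checks are independent on purpose: a model can have a high training error and a large train/validation gap at the same time, so the sketch reports both problems instead of picking one.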

One Image One Model Change The World

Building a model is a very long process, but it always makes me happy. I know that building a good "brain" is not easy, but hope is important. In the example below, I will show you which type of model is important.