I'm very excited that you've decided to learn deep learning and computer vision. In this article, you'll learn how computer vision systems work, why they are important in today's world, and more. After reading this article, you'll have a solid grasp of the fundamentals of this field. That's my promise to you.

Now let's get started learning one of the most exciting fields of the 21st century: deep learning with computer vision.

Keep reading! 🔥

What Is Computer Vision

In recent years, computer vision has advanced rapidly thanks to AI and deep learning techniques. Today, cars can drive from point A to point B without a driver, and facial recognition systems can unlock an office or home door. In some tasks, machines can spot signs of cancer in medical images faster than humans. These are very exciting achievements, and we owe them to computer vision. As one great man said: when you don't see clearly, you don't work properly!

Let's understand what computer vision, or CV for short, means.

Computer vision is the eye of artificial intelligence. I know you think I'm joking 😜, but it's the truth. Computer vision is the field in which we humans teach machines to understand our visual world. See the example below to understand it better.

Suppose you build an application that predicts whether someone has Covid or not. That's a great job (congrats 👏🏻). Here is your job as a computer vision developer:

Collect lots of visual data from Covid patients.

Preprocess this data.

Train a computer vision model on this data.

Test your predictions. (That's it. Congrats, you've built a Covid-19 detection model that predicts whether someone is a patient or not.)

Did you notice one thing? You taught the machine using visual images.

📸🤖

A key point to remember 💥: computer vision is the field in which humans teach machines to understand the visual world. We humans have many senses for understanding our world (sight, sound, smell, and so on). AI is similar, and computer vision is just one of its ways of understanding the world: the visual one.

What Is Visual Perception

Visual perception is the act of observing patterns and objects through sight or visual input. Take a Tesla self-driving car as an example. Visual perception means understanding the surrounding objects and their specific details: detecting traffic signs 🚦, maintaining the car's speed, judging the condition of the road, knowing where other cars are, and reading the road name ❓. We are not just trying to capture the surrounding environment. We are trying to build a computer vision system that can understand the environment through visual input.

What Are Vision Systems

Over the past few decades, people have treated traditional image processing techniques as computer vision, but this is inaccurate. A machine processing an image is different from a machine understanding an image's content, which is far more challenging. The goal of computer vision is to replicate human visual perception.

It involves teaching computers to identify and process images in the same way that humans do. It helps machines recognize objects, faces, scenes, and more in visual media such as images and videos. Image processing is a very important part of computer vision.

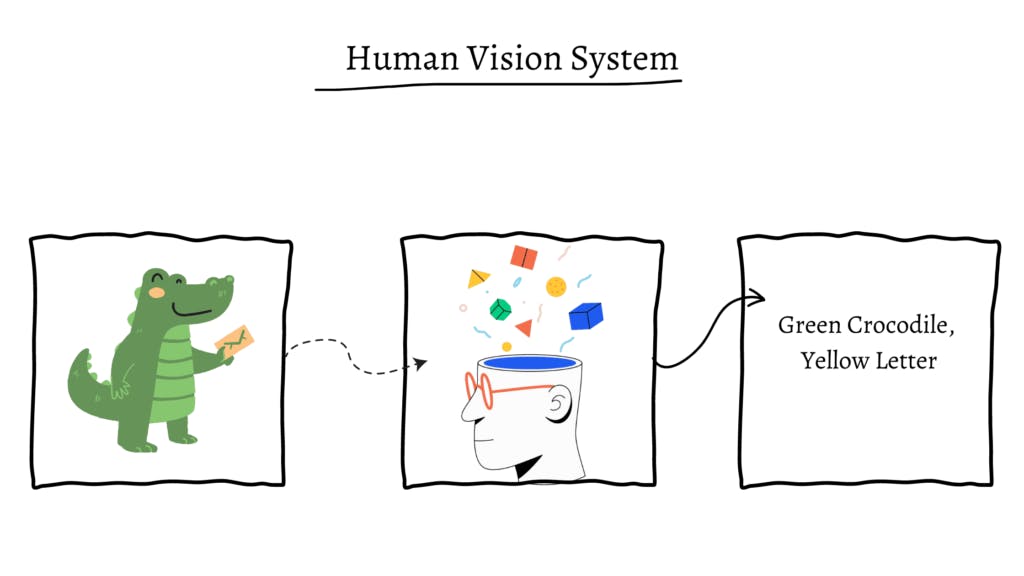

How Human Vision Systems Work

To understand computer vision systems, first understand how human vision systems work.

Suppose you see an image that contains many pandas 🐼, and your brain quickly classifies it. But this ability did not develop in one day. Your brain achieves this kind of classification only after many years of practice. Consider this example 💥: one day you are sitting on a chair, and your friend comes and shows you a picture of a panda. You don't know what a panda looks like because this is the first time in your life you have seen one. After you see many such images, your brain captures this information, and you can quickly recognize what a panda 🐼 looks like. Then your friend shows you another picture that contains a tiger and a panda. Your brain sees this image, analyzes the object's features (black-and-white fur, a round body, a big mouth, and so on), and you say it's a panda. You can train your mind on many animal images (cats, dogs, monkeys, and elephants), or train it to identify any other object. The same is true for computers, but it's harder to teach a computer what an object is. We humans learn objects quickly, whereas a computer needs to see thousands, or in more complex cases millions, of images before it learns to identify them.

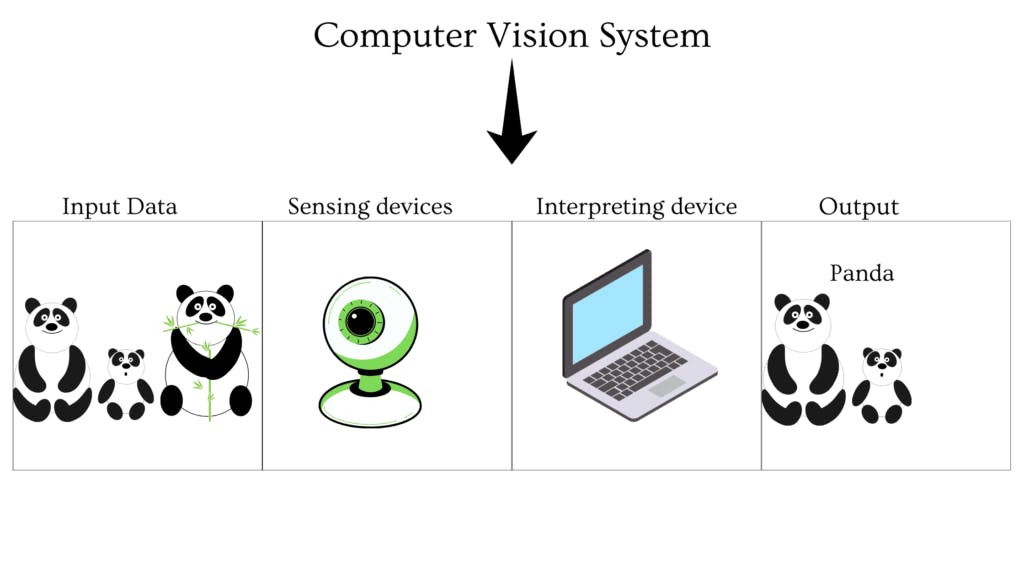

Artificial Intelligence Vision Systems

In recent years, scientists have been inspired by the human vision system and have tried to implement it in machine vision. They found that copying the visual system in a machine needs two main components:

A sensing device that collects image input data.

A powerful deep learning algorithm that classifies images.

Sensing Devices

Consider the autonomous vehicle example again. The main goal of a self-driving car 🚗 is to move from point A to point B safely and in a timely manner. To achieve this, the car needs sensing devices that capture visual information about its surroundings. Sensing devices used in computer vision applications include cameras, radar, X-ray machines, CT scanners, lidar, and so on.

Here are some of the sensing devices used in self-driving cars! 👇🏻

Lidar is a radar-like technique that uses laser light to create a high-resolution 3D map of the surrounding area.

The camera can see street signs and road markings.

Radar measures the distance to surrounding objects.

Can Machine Learning Achieve Better Performance Than The Human Brain

If you had asked me this question 10 years ago, I would have said probably not. But today, on some specific tasks, it's possible to achieve better performance than humans, thanks to the great achievements of deep learning techniques.

Suppose I give you a book that contains 10,000 dog images 🐶, and your job is to learn them and tell me the breed of each dog.

Here are some questions for you ❓

How much time do you need to learn? ⏱️

How much accuracy can you give me once your learning is complete? 💯

With neural networks, it can take just a few hours to train a model that achieves more than 95% accuracy.

I am not saying that computer vision replaces human capability. Humans are more powerful than machines, but sometimes it's important to accept what machines do well.

Applications Of Computer Vision

By now you have a basic understanding of how computer vision systems work. Now let's see where computer vision systems are used in the real world 🌏. This shows why the field is worth learning. I believe learning needs a goal to achieve 🎯. Below are some of the areas where computer vision systems are used.

Image Classification

Image classification is a task where you have a labeled dataset, train a deep neural network on it, and use the network to classify images. Here is an example.

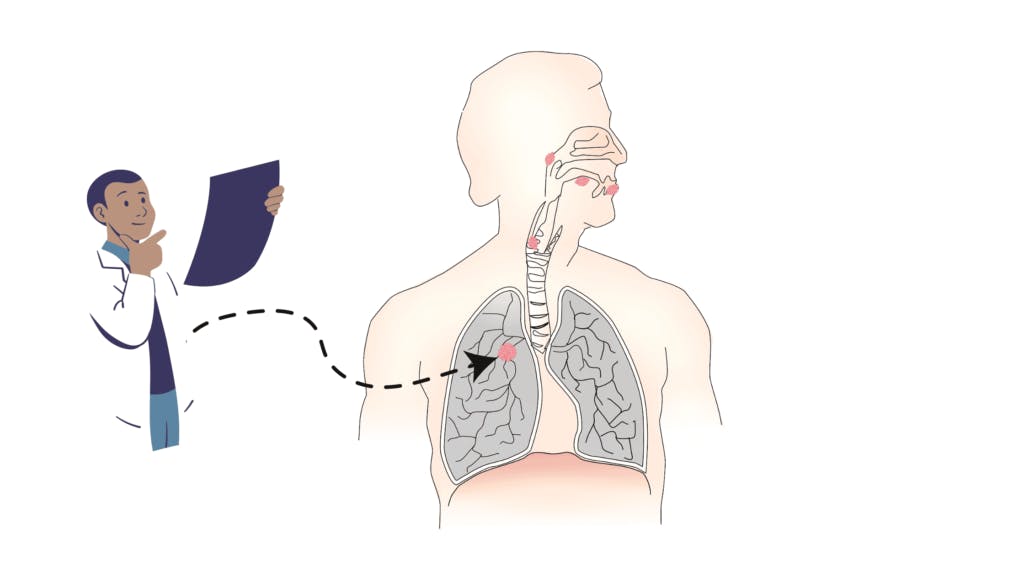

Lung cancer diagnosis: lung cancer is a very dangerous disease. It's hard to spot with the human eye, so doctors often find it only at a middle or late stage, and by then it can cost the patient's life. That's why many companies have invested in building computer vision applications to detect this type of disease. Companies researching this field include Google, Nvidia, and Microsoft.

Object Detection And Localization

You may want a computer vision model that doesn't just classify an image but also detects and locates the objects in it. This is called object detection. Some of the best-known object detection models today are YOLO (You Only Look Once), SSD (Single Shot Detector), and Faster R-CNN.

Some of the most popular use cases:

In shopping stores: analyzing customer experience 😀😡

On roads: detecting people's behavior.

Generating Art

These days, AI models can create inspiring art within seconds, thanks to generative models such as GANs and diffusion models.

Did you know 😵? In October 2018, an AI-generated artwork named Portrait of Edmond Belamy sold for $432,500.

Some of the best-known AI art models are:

Midjourney

DALL-E

Stable Diffusion

Here is an example of AI-generated images.

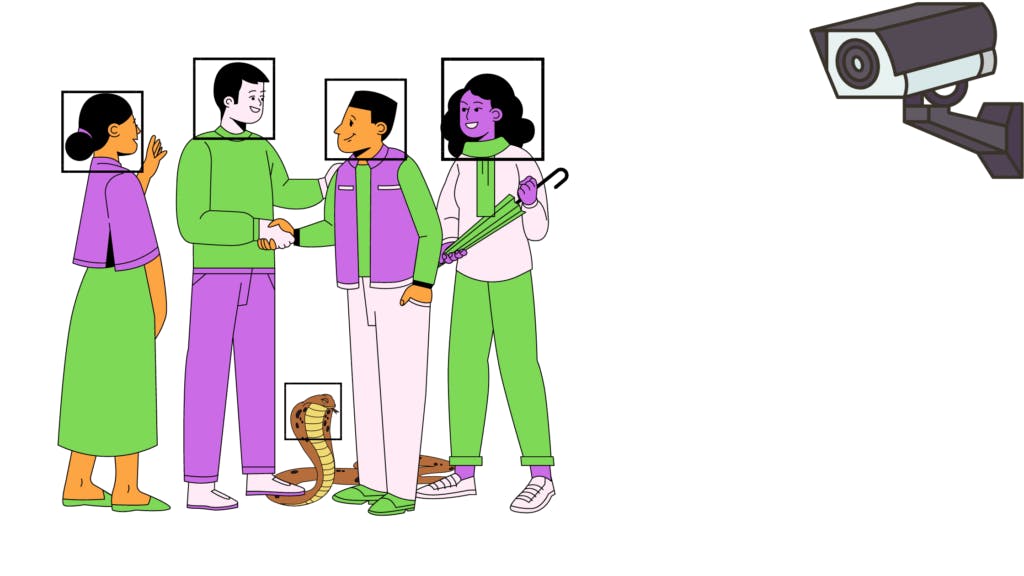

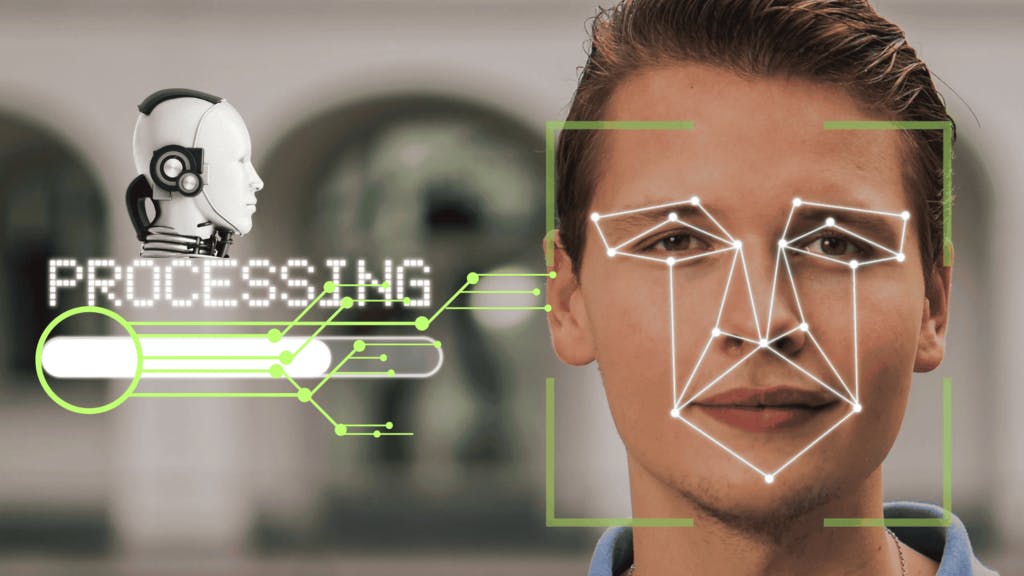

Face Recognition

Face recognition is the process of precisely identifying or labeling an image of an individual. It has various practical applications in our daily lives, such as searching for celebrities online and automatically tagging friends and family in pictures. This technology is a type of fine-grained classification. The famous Handbook of Face Recognition divides a face recognition (FR) system into two modes: face identification and face verification.

Face identification: a query face image is compared to all template images in a database to determine the identity of the query face through one-to-many matching.

Face verification: a one-to-one match in which a query face image is compared to the template face image of the identity being claimed.
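To make the difference between the two modes concrete, here is a minimal sketch. It assumes each face has already been turned into a list of numbers (an "embedding") by some model. The tiny 3-number embeddings, the names `alice` and `bob`, the cosine similarity measure, and the 0.8 threshold are all made up for illustration; they are not taken from the handbook.

```python
import numpy as np

def cosine_similarity(a, b):
    """Similarity between two face embeddings (close to 1.0 means very similar)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def verify(query, template, threshold=0.8):
    """Face verification: one-to-one match against a claimed identity."""
    return cosine_similarity(query, template) >= threshold

def identify(query, database):
    """Face identification: one-to-many match, returns the best-scoring name."""
    scores = {name: cosine_similarity(query, emb) for name, emb in database.items()}
    return max(scores, key=scores.get)

# Hypothetical embeddings; real systems use far more dimensions.
database = {
    "alice": np.array([0.9, 0.1, 0.0]),
    "bob": np.array([0.1, 0.9, 0.2]),
}
query = np.array([0.8, 0.2, 0.1])

print(identify(query, database))         # alice
print(verify(query, database["alice"]))  # True
print(verify(query, database["bob"]))    # False
```

Identification searches the whole database, so it gets slower as the database grows. Verification only ever compares two faces.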

Image Recommendation System

Image recommendation is a task where your neural network model returns similar images based on a user's search query. This type of technology is used by e-commerce, social media, and video platform websites.

Suppose you search Amazon.com 🔍 for a new book on deep learning for computer vision. Many books appear.

You click on one book 📓

Then you see many books suggested by Amazon based on what you searched for or on your previous history, because they use a recommendation system.

Another example 🎬: YouTube and Netflix recommend which video to watch next. I hope that one day you build this type of system and share it with the world. ⏱️

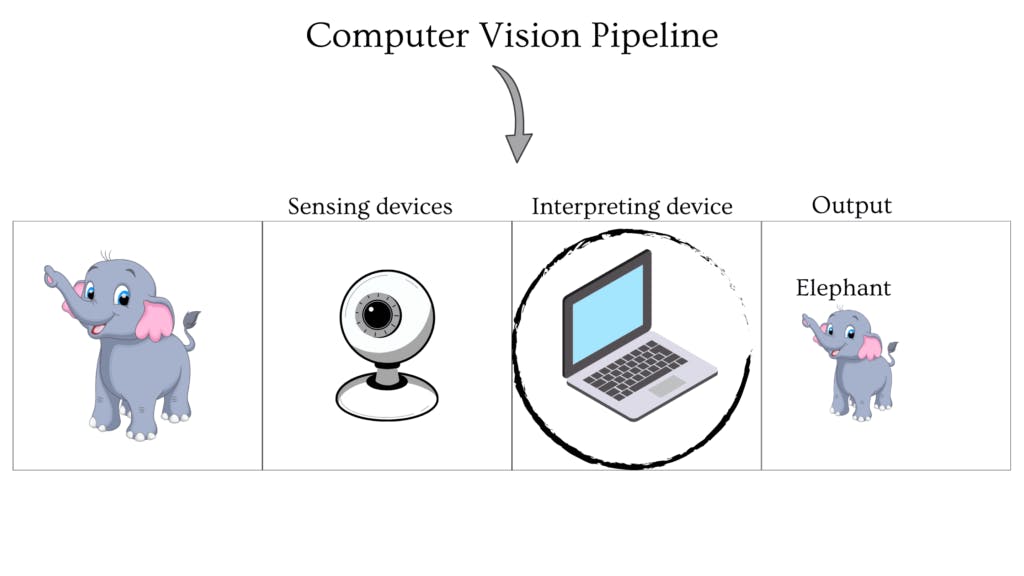

Computer Vision Pipeline

In this section, you'll learn how a computer processes and understands an image. As you know, machines use sensing devices to collect input, but the question is: how do they understand our visual world?

Keep reading! 💥

I know this article is very long. But keep in mind that sitting and learning are different things 🎓. What you choose makes the difference. ☀️ Computer vision systems process and analyze image data in a sequence of steps. This sequence is called the computer vision pipeline. See the image below to understand this pipeline better.

Let's apply this pipeline to an image classification example. Suppose you have an image of a dog, and you build a model that predicts the probability that the object belongs to each of these classes: dog 🐕, elephant 🐘, and snake 🐍.

Definition of image classification: in machine learning, image classification is a task in which an algorithm takes an image as input and outputs one of the labels (classes) you provided at training time. It's that simple.

Classification ❓= Input (labeled data) + Machine learning = Output 🤖
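Here is a small sketch of what "predicting the probability of each class" can look like. It assumes the model produces one raw score per class and that a softmax function turns those scores into probabilities. Softmax is a common choice but is my assumption here, and the three scores are invented numbers, not the output of a real model.

```python
import math

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

classes = ["dog", "elephant", "snake"]
raw_scores = [3.0, 1.0, 0.2]  # hypothetical model outputs for one dog image

probabilities = softmax(raw_scores)
for name, p in zip(classes, probabilities):
    print(f"{name}: {p:.2f}")

# The predicted label is simply the class with the highest probability.
predicted = classes[probabilities.index(max(probabilities))]
print("Predicted class:", predicted)
```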

Let's see how a computer vision pipeline works in a classification problem. 🪜

A computer receives visual input from an imaging device such as a camera. Visual input means digital image data.

Don't be confused 💥: a video is a sequence of images played over time.

After collecting the input data, it's time to do some preprocessing. Common preprocessing steps include:

Resizing the image (to 420 x 620 from 720 x 920)

Blurring, rotation, cropping, etc.

Changing the shape of the image.

Changing the color of the image, for example from color to grayscale. Image preprocessing includes many steps. I'll write about this topic in an upcoming article!

Extract features from this image dataset. Features are very helpful for identifying the object in an image. In this case, our features might be the color of the dog, its height, its shape, and so on 🐕

Train the classification model on these features, so that the model 🤖 learns them.

The last step is to check the model's predictions. 💯

If everything is fine, we deploy the model in the real world.

One thing to remember 💥: always check the model's performance, and improve it whenever you find an issue.
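The steps above can be sketched in a few lines of code. This is only a toy version: the "images" are random numbers standing in for camera input, the features are just the average brightness and its spread, and the "model" is a simple nearest-centroid rule that I picked for illustration instead of a neural network. All function names are hypothetical.

```python
import numpy as np

def preprocess(image):
    """Preprocessing: scale pixel values from 0-255 to 0-1."""
    return np.asarray(image, dtype=np.float32) / 255.0

def extract_features(image):
    """Feature extraction: a toy feature vector (mean and spread of brightness)."""
    return np.array([image.mean(), image.std()])

def train(images, labels):
    """Training: store the average feature vector (centroid) of each class."""
    features = np.array([extract_features(preprocess(img)) for img in images])
    label_array = np.array(labels)
    return {
        label: features[label_array == label].mean(axis=0)
        for label in set(labels)
    }

def predict(model, image):
    """Prediction: pick the class whose centroid is closest to the image's features."""
    feats = extract_features(preprocess(image))
    return min(model, key=lambda label: np.linalg.norm(feats - model[label]))

# Input: synthetic 8x8 "images" stand in for real camera data (an assumption).
rng = np.random.default_rng(0)
dark = [rng.integers(0, 80, size=(8, 8)) for _ in range(5)]
bright = [rng.integers(170, 256, size=(8, 8)) for _ in range(5)]

model = train(dark + bright, ["dark"] * 5 + ["bright"] * 5)

# Check the model on an image it has not seen before.
test_image = rng.integers(180, 256, size=(8, 8))
print(predict(model, test_image))  # bright
```

A real pipeline swaps each function for something stronger, but the order of the steps stays the same.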

Image Input

In computer vision applications, we work with image or video data. In this section, you'll learn a little about the difference between grayscale and color images.

Image As Functions

Every image can be represented as a function f(x, y) of two variables, x and y, defined over a two-dimensional area. An image is made of a grid of pixels, and the pixel is the basic building block of any image.

Let's see an example so you understand more.

Note 💥: from here on I'll write some code. If you don't understand it yet, that's totally fine.

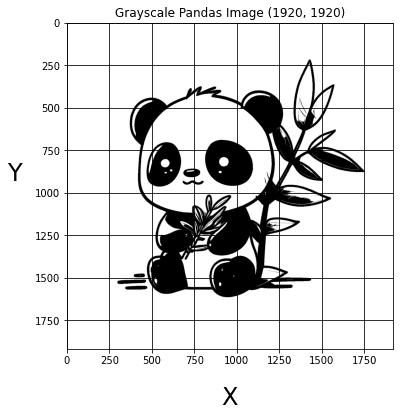

```python
import matplotlib.pyplot as plt
import cv2

# Load the image as a single-channel grayscale matrix
pandas_image = cv2.imread('/content/drive/MyDrive/panda-7665674_1920.jpg', cv2.IMREAD_GRAYSCALE)

# Otsu thresholding turns every pixel into pure black (0) or pure white (255)
(thresh, im_bw) = cv2.threshold(pandas_image, 128, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

fig, axis = plt.subplots(figsize=(10, 6))
plt.title(f"Grayscale Pandas Image {im_bw.shape}")
plt.xlabel("X", labelpad=20, size=24)
plt.ylabel("Y", labelpad=20, size=24, rotation='horizontal')
plt.imshow(im_bw, cmap='gray')
plt.grid(color='black')

print(pandas_image.shape)  # (height, width)
```

Note 💥: this grayscale panda image is 1920 x 1920 in size, meaning it is 1920 pixels wide and 1920 pixels high. Pixel coordinates run from 0 to 1919 on both the x-axis and the y-axis. Overall, this image has 3,686,400 (1920 x 1920) pixels.

In this grayscale panda image, each pixel contains a value that represents the intensity of light at that pixel. Every pixel value ranges from 0 to 255: 0 represents very dark pixels (black), 255 very bright pixels (white), and the values in between are shades of gray.

Remember 🔥

0 is black, 255 is white, and the values in between are gray!

A grayscale image has one color channel.

Computers see images as matrices.
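To see the "image as a function" and "image as a matrix" ideas together, here is a tiny sketch with a hand-made 3 x 4 grayscale image. The pixel values and the helper `f` are made up for illustration. The only thing to watch is that NumPy indexes the row (y) first and the column (x) second.

```python
import numpy as np

# A tiny hypothetical grayscale image: 3 rows (height), 4 columns (width).
image = np.array([
    [0,   64, 128, 255],
    [32,  96, 160, 224],
    [255, 128, 64,   0],
], dtype=np.uint8)

def f(x, y):
    """Image as a function: intensity at column x, row y."""
    return int(image[y, x])  # NumPy indexes rows (y) first, then columns (x)

height, width = image.shape
print("shape (height, width):", image.shape)  # (3, 4)
print("total pixels:", height * width)        # 12
print("f(0, 0) =", f(0, 0))                   # 0, top-left pixel, black
print("f(3, 0) =", f(3, 0))                   # 255, top-right pixel, white
```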

Color Images

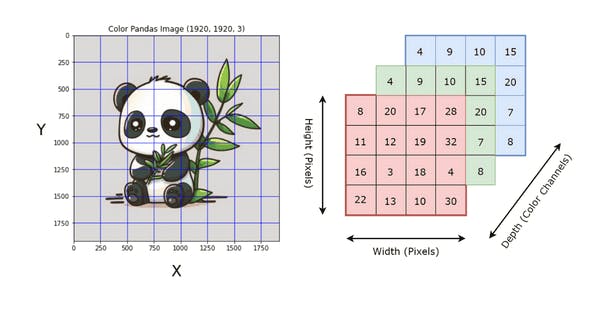

A color image, on the other hand, is different because it has 3 color channels. The most popular color models are RGB (red, green, blue) and HSV (hue, saturation, value). Let's write some code and look at it visually so you understand better.

```python
import matplotlib.pyplot as plt
import cv2

# Load the image with all three color channels (OpenCV returns them in BGR order)
pandas_image = cv2.imread('/content/drive/MyDrive/panda-7665674_1920.jpg', cv2.IMREAD_COLOR)

# Convert BGR to RGB so matplotlib shows the correct colors
pandas_image = cv2.cvtColor(pandas_image, cv2.COLOR_BGR2RGB)

fig, axis = plt.subplots(figsize=(10, 6))
plt.title(f"Color Pandas Image {pandas_image.shape}")
plt.xlabel("X", labelpad=20, size=24)
plt.ylabel("Y", labelpad=20, size=24, rotation='horizontal')
plt.imshow(pandas_image)
plt.grid(color='blue')

print(pandas_image.shape)  # (height, width, channels)
```

Note 💥: this is a color image of a panda with a height of 1920, a width of 1920, and 3 color channels.

Question ❓: How Do Computers See Color?

Computers see images as matrices. A grayscale image is a 2D matrix, and a color image is a 3D matrix (a stack of three 2D matrices). A grayscale image has only one color channel (gray), while a color image has 3 color channels (red, green, and blue 🔴🟢🔵).
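A tiny hand-made example shows the 3D shape clearly. The 2 x 2 image below is invented for illustration. Each pixel holds three numbers, and slicing out one channel gives back an ordinary 2D matrix.

```python
import numpy as np

# A hypothetical 2x2 RGB image: each pixel holds [red, green, blue].
color = np.array([
    [[255, 0, 0], [0, 255, 0]],      # red pixel, green pixel
    [[0, 0, 255], [255, 255, 255]],  # blue pixel, white pixel
], dtype=np.uint8)

print(color.shape)  # (2, 2, 3) = (height, width, channels)

# Each channel on its own is a 2D matrix, just like a grayscale image.
red, green, blue = color[:, :, 0], color[:, :, 1], color[:, :, 2]
print(red.shape)    # (2, 2)
print(red)          # high values where the pixel contains red
```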

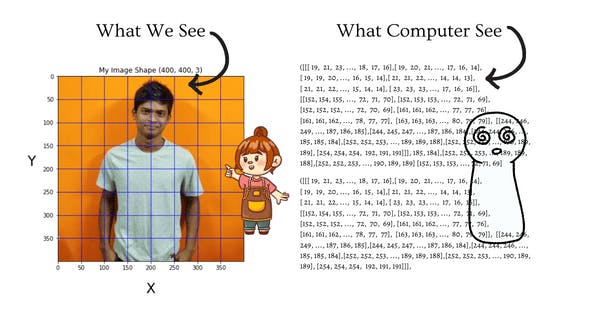

How Computers See Images

When you look at an image, you see objects, colors, and so on. But a computer sees an image as numbers. In computer science, machines understand numbers, and we work with these numbers to build powerful machines.

```python
# importing modules
import cv2
import matplotlib.pyplot as plt

# How computers see images
my_image = cv2.imread('/content/drive/MyDrive/my_image.jpg', cv2.IMREAD_COLOR)
my_image = cv2.cvtColor(my_image, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; matplotlib expects RGB

fig, axis = plt.subplots(figsize=(10, 6))
plt.title(f"My Image Shape {my_image.shape}")
plt.xlabel("X", labelpad=20, size=24)
plt.ylabel("Y", labelpad=20, size=24, rotation='horizontal')
plt.grid(color='blue')
plt.imshow(my_image)

# The same image as raw numbers: the top-left 2x2 pixels, each as [R, G, B]
print(my_image[:2, :2])
```

What Is The Purpose Of Image Preprocessing?

In computer vision, you spend most of your time preprocessing data, because real-world data is not clean or model-friendly. Deep learning is all about data: without data you can't build anything, but the real-world data you have is rarely perfect, and most of the time you work with messy data. Nothing is perfect, but we make it better. That's why we write code!

Image preprocessing is an important task in computer vision because machines don't know what objects are. So when you train a model, the first job is to prepare the data in a form the machine can learn from quickly.

Some of the image preprocessing techniques you use when building a computer vision model are resizing all images to the same size and converting color images to grayscale or to other color spaces. One thing to remember: image preprocessing is a technique that cleans and prepares our data before we build a computer vision model.
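Here is a rough sketch of these preprocessing steps using only NumPy. The random arrays stand in for real photos of different sizes, the function names are hypothetical, and the nearest-neighbor resize is the simplest method I could pick for illustration. In practice you would more likely call a library function such as `cv2.resize`.

```python
import numpy as np

def to_grayscale(rgb):
    """Weighted sum of R, G, B using the common luminance weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb @ weights

def resize_nearest(image, new_height, new_width):
    """Nearest-neighbor resize: pick the closest source pixel for each output pixel."""
    rows = np.arange(new_height) * image.shape[0] // new_height
    cols = np.arange(new_width) * image.shape[1] // new_width
    return image[rows][:, cols]

def normalize(image):
    """Scale pixel values from 0-255 to 0-1."""
    return image / 255.0

# Synthetic images of different sizes stand in for a messy real dataset.
rng = np.random.default_rng(42)
raw_images = [
    rng.integers(0, 256, size=(720, 920, 3)),
    rng.integers(0, 256, size=(500, 300, 3)),
]

# After preprocessing, every image has the same shape and value range.
batch = np.stack([
    normalize(resize_nearest(to_grayscale(img), 64, 64)) for img in raw_images
])
print(batch.shape)  # (2, 64, 64)
```

Once every image has the same shape, they can be stacked into one array, which is the form most models expect.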

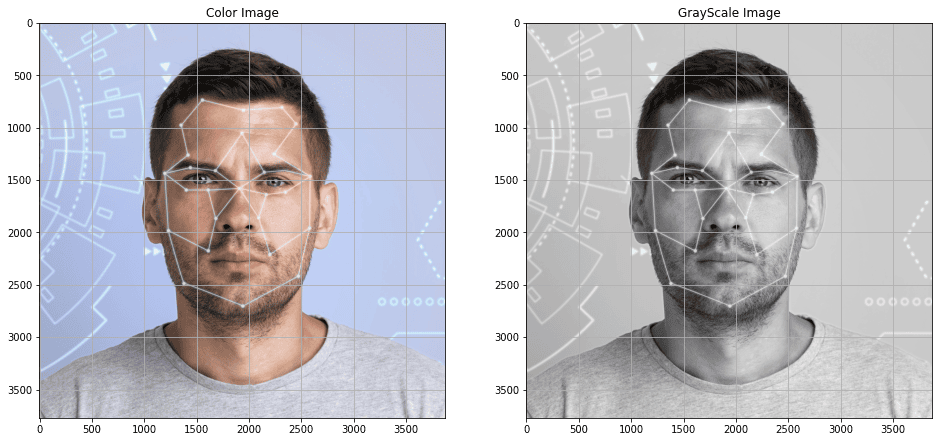

Advantages Of Converting Images To Grayscale

Grayscale images can be used in many computer vision problems where color information is not important. Here is a real-life example of using grayscale images in computer vision.

Face recognition: this is a popular computer vision problem that involves identifying and verifying people based on their facial features 🤓🧐. Grayscale images are frequently used in this problem because they provide sufficient information about a person's facial structure, such as the shape of the eyes, nose, mouth, and other features. In addition, grayscale images can reduce the computational cost of the face recognition algorithm compared to color images.

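```python
import cv2
import matplotlib.pyplot as plt

# Load the same photo twice: once in color (BGR) and once as grayscale
face = cv2.imread("/content/portrait-man-face-scann.jpg")
gray_face = cv2.imread('/content/portrait-man-face-scann.jpg', cv2.IMREAD_GRAYSCALE)

fig, axes = plt.subplots(ncols=2, figsize=(16, 8))

# Reverse the channel order (BGR to RGB) so matplotlib shows the correct colors
axes[0].imshow(face[:, :, ::-1])
axes[0].grid()
axes[0].set_title("Color Image")

axes[1].imshow(gray_face, cmap='gray')
axes[1].grid()
axes[1].set_title("GrayScale Image")

plt.show()
```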

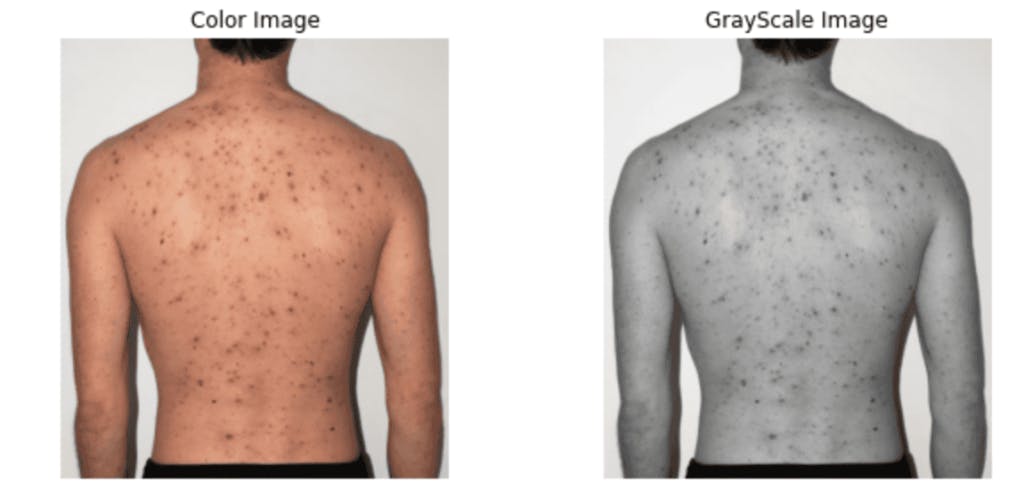
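A quick bit of arithmetic shows where the savings come from. This sketch uses empty arrays of the same 1920 x 1920 size as the panda image from earlier in this article and assumes one byte per value.

```python
import numpy as np

height, width = 1920, 1920  # the panda image size used earlier in this article

color = np.zeros((height, width, 3), dtype=np.uint8)  # 3 channels
gray = np.zeros((height, width), dtype=np.uint8)      # 1 channel

print("color values:", color.size)        # 11059200
print("gray values: ", gray.size)         # 3686400
print("ratio:", color.size // gray.size)  # 3
```

A grayscale image holds one third of the numbers, so every later step has less data to read and process.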

When Is Color Important

Converting color images to grayscale is very helpful for some computer vision problems, but for other problems color is very important. Suppose you build a model that detects skin cancer 🦠 (red rashes). In that case, you need color. Color images provide more information than grayscale images, and knowing which kind of image to use for which problem is the key. If you use grayscale images for skin cancer detection, prediction is much harder than with color images.

Let’s see an example! 🔥

```python
import matplotlib.pyplot as plt
import cv2

cancer_img = cv2.imread("cancer_image_for_computer_vision.jpg", cv2.IMREAD_COLOR)
gray_cancer_img = cv2.cvtColor(cancer_img, cv2.COLOR_BGR2GRAY)

# One row, two columns: color on the left, grayscale on the right
rows, columns = 1, 2
fig = plt.figure(figsize=(10, 10))

# Adds a subplot at the 1st position
fig.add_subplot(rows, columns, 1)

# showing image (reverse BGR to RGB for matplotlib)
plt.imshow(cancer_img[:, :, ::-1])
plt.axis('off')
plt.title("Color Image")

# Adds a subplot at the 2nd position
fig.add_subplot(rows, columns, 2)

# showing image
plt.imshow(gray_cancer_img, cmap='gray')
plt.axis('off')
plt.title("GrayScale Image")
```
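To see why grayscale can hide important information, here is a small sketch with two hand-picked colors. It uses the standard luminance weights for converting RGB to gray (the same weights OpenCV documents for this conversion). The two example pixels are invented for illustration.

```python
import numpy as np

# Luminance weights for R, G, B used in the standard RGB-to-gray conversion.
WEIGHTS = np.array([0.299, 0.587, 0.114])

def to_gray(rgb):
    """Convert one RGB pixel to a single gray value."""
    return int(round(float(np.dot(rgb, WEIGHTS))))

red_patch = np.array([255, 0, 0])    # e.g. an inflamed, red area
green_patch = np.array([0, 130, 0])  # a clearly different color

print(to_gray(red_patch))    # 76
print(to_gray(green_patch))  # 76
```

Two very different colors end up as the same gray value, so a model that only sees the grayscale image cannot tell them apart.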

What Is The Purpose Of Feature Extraction

Feature extraction is one of the most important components of the CV pipeline. In fact, you spend much of your time extracting features that clearly identify the objects in an image. Let's first understand what a feature is.

Features are individual properties that identify an object in an image. As an analogy from outside vision, suppose you build a model that predicts the price of a house. Your feature variables would be square_foot, number_of_rooms, bathrooms, and so on 🏠.

Features are small pieces of information that identify objects. Extracting them is a very important task in deep learning.
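As a rough illustration, here is one very simple way to turn an image into a feature vector: the average of each color channel plus a small brightness histogram. These hand-crafted features, the function name, and the 4 x 4 test image are my own choices for the sketch. Deep learning models usually learn their own features instead.

```python
import numpy as np

def extract_features(rgb_image, bins=4):
    """Build a small feature vector: mean of each color channel plus a brightness histogram."""
    channel_means = rgb_image.reshape(-1, 3).mean(axis=0)  # 3 numbers, one per channel
    brightness = rgb_image.mean(axis=2)                    # 2D matrix of per-pixel brightness
    hist, _ = np.histogram(brightness, bins=bins, range=(0, 256))
    hist = hist / hist.sum()                               # fraction of pixels in each bin
    return np.concatenate([channel_means, hist])

# A synthetic 4x4 image: top half black, bottom half white (a crude "panda" pattern).
image = np.zeros((4, 4, 3), dtype=np.uint8)
image[2:, :, :] = 255

features = extract_features(image)
print(features.shape)  # (7,)
print(features)        # three channel means of 127.5, then histogram [0.5, 0, 0, 0.5]
```

The image has 48 numbers, but the feature vector has only 7, and those 7 already say "half very dark, half very bright".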

Summary of this article:

Computer vision is a subfield of artificial intelligence.

Humans teach machines to understand the visual world.

Color images are very important, but sometimes grayscale images are beneficial, depending on the problem we are working on.

Features are small pieces of information that help identify the objects in an image.

Image preprocessing is a very useful technique that helps clean and prepare data before working on the model.

Computers see images as matrices.

The goal of computer vision is to solve problems, not create them!